Utilization. Latency. Throughput. In the world of public cloud computing, we’re bombarded by metrics of how well resources are being used. But one goal is rarely discussed: how to make cloud workloads efficient.

Let’s agree on this working definition: efficiency is a measure of the useful energy you get out of something compared with the energy you put in. Take the example of a water heater: in home-improvement stores, you can buy one that is 85% efficient or another that’s 92% efficient. How do manufacturers know that ratio? They measure the energy put into the device and the heat transferred to the water that comes out.
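Written as a ratio, this working definition is the familiar efficiency formula. The symbols below are introduced here for illustration only:

$$
\eta = \frac{E_{\text{out}}}{E_{\text{in}}}, \qquad \eta_{\text{heater}} = 0.85 \;\Rightarrow\; E_{\text{out}} = 0.85\,E_{\text{in}}
$$

In other words, an 85%-efficient water heater transfers 0.85 kWh of heat to the water for every 1 kWh of energy put in, while a 92%-efficient model transfers 0.92 kWh.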

With cloud computing, inputs and outputs are much harder to measure—and few are within your control. You can’t decide how much electricity a particular cloud provider’s regional data centers use to power their services, but you can choose which types of services you use within those regions. And since those services are often priced according to how efficiently they can be operated, you can use price as a proxy for efficiency.

Design your applications for efficiency—and profitability

What you can control is the efficiency of your application designs. For example, let’s say your cloud application runs on dedicated compute and database instances, using block storage. You’re getting the best rates for those services by pre-purchasing reserved capacity. So your application is “cost-optimized”—but is it efficient?

That depends on how well your architecture matches actual demand. Does your application need 24×7 availability? Could it run on cloud functions or another serverless design, or does it require traditional compute instances? Does it require a traditional database, or could a less stateful model serve it equally well? As for storage, does all of it need to be available instantly, or could some data move to object storage and take advantage of lower-cost, infrequent-access tiers?

Consider the following illustration of changes in monthly cloud costs over time, with a focus on optimization (left) versus efficiency (right):

The illustration depicts two alternative scenarios: on the left, existing services and resources are optimized; on the right, the application is rearchitected. Two key cost categories, compute and serverless, are highlighted. In the left graph, the cost of every service decreases over time, but compute remains a consistent share of overall cloud spending. In the right graph, compute drops out entirely in favor of serverless. Both designs end up costing the same.

Efficiency drives better business output

The key difference is the business output (the dotted line), which is far higher with the new design. Getting more out of the application at the same level of spend is a marked improvement in efficiency. Strategic changes like this can drive step changes in business results and profitability.
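To make "more output at the same spend" concrete, here is a minimal Python sketch that treats efficiency as business output per unit of cloud spend. The `Design` class, the choice of output metric, and every figure are hypothetical illustrations, not values read from the graphic or produced by Ternary.

```python
from dataclasses import dataclass


@dataclass
class Design:
    name: str
    monthly_spend: float    # cloud cost per month (any currency)
    business_output: float  # hypothetical metric, e.g. orders processed per month

    def efficiency(self) -> float:
        """Business output obtained per unit of cloud spend."""
        return self.business_output / self.monthly_spend


# Hypothetical numbers for illustration only: both designs cost the same,
# but the rearchitected one delivers more business output.
optimized = Design("optimized", monthly_spend=10_000, business_output=50_000)
rearchitected = Design("rearchitected", monthly_spend=10_000, business_output=80_000)

for d in (optimized, rearchitected):
    print(f"{d.name}: {d.efficiency():.1f} units of output per unit of spend")

# Relative efficiency gain at equal spend.
gain = rearchitected.efficiency() / optimized.efficiency() - 1
print(f"Efficiency gain at equal spend: {gain:.0%}")
```

Because spend is held constant, the entire efficiency gain in this sketch comes from the output side of the ratio, which is exactly the effect a cost-only view would miss.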

Modern cloud computing permits design paradigms that simply weren’t available in the capex-heavy, on-premises world. Substantial gains in efficiency can be achieved by rethinking applications from the ground up, taking advantage of massively scalable, serverless, decoupled designs.

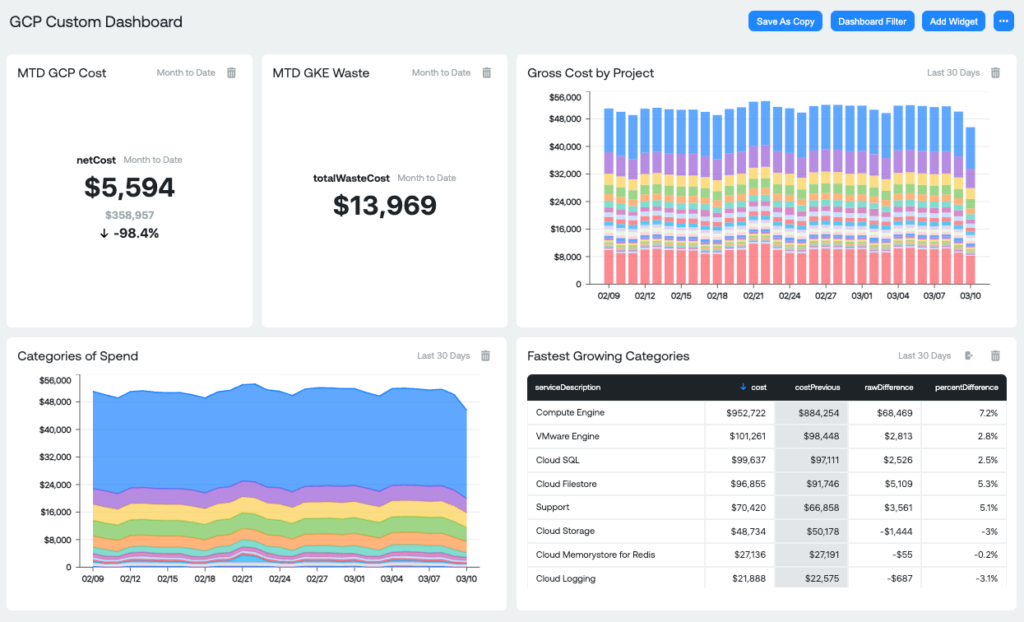

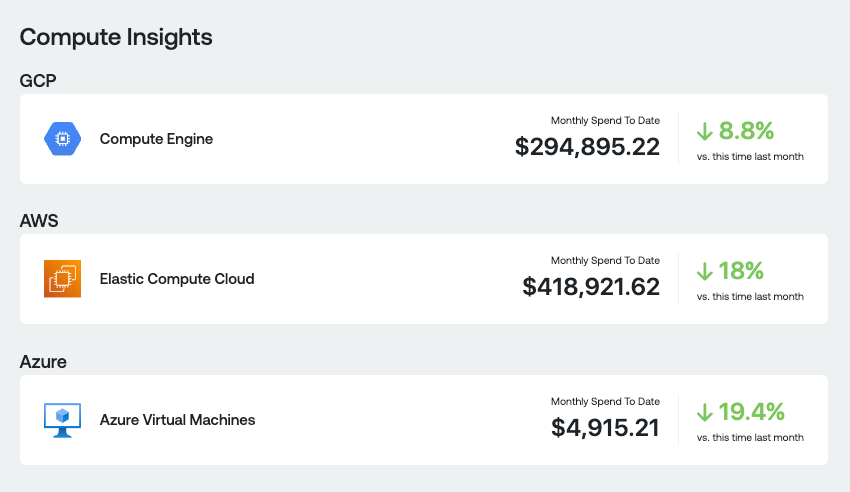

Throughout your FinOps journey, Ternary can help you identify how to make cloud workloads more efficient: where you are over-provisioned and where your workloads don’t match the resources on which they are deployed.

Ternary identifies opportunities for improving efficiency

Our platform can help surface opportunities for rearchitecting and track improvements over time, and it can also help with workload and rate optimization. And while the upfront redesign work can feel like a steep climb, the payoff is well worth the effort.

Learn how Ternary can help you analyze the efficiency of your cloud strategy.