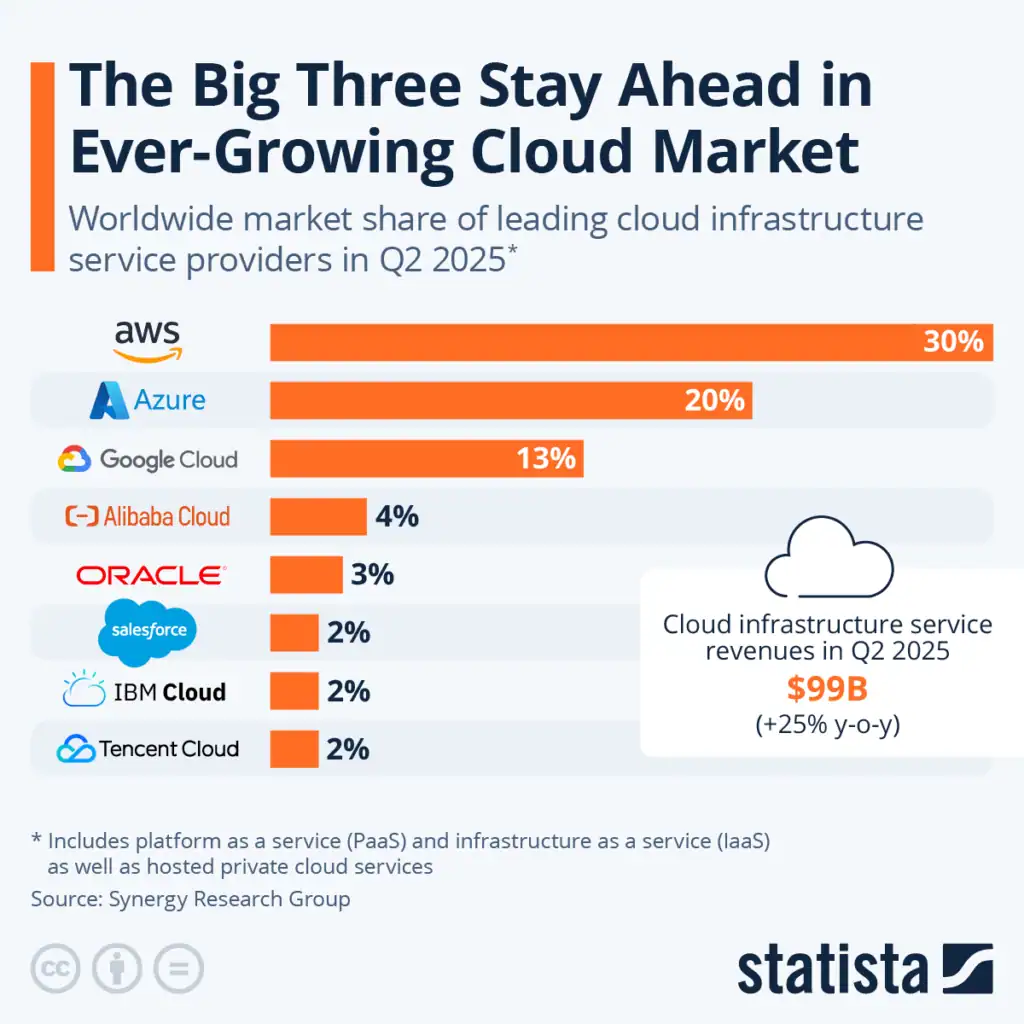

As more organizations migrate their infrastructure to cloud platforms like Amazon Web Services (AWS), cloud costs consume a growing share of IT budgets. But why does cloud, especially AWS, have to cost so much? Is it like this by design, or is it a matter of the usage and architecture choices teams make?

First, you should know that a high AWS bill isn’t inevitable. It’s quite avoidable, thanks to the many AWS cost optimization strategies available.

In this guide, we’ll explore the top 6 strategies. But first, let’s get an overview of AWS cost optimization and see whether AWS is actually expensive or just feels like it.

What is AWS cost optimization?

In most organizations, cost is a behind-the-scenes finance concern.

AWS cost optimization changes that by bringing cost consciousness right into the architectural and development process.

And since Elastic Compute Cloud (EC2) instances are typically the largest line item on an AWS bill, they are given the most attention.

For compute, the main savings lever is moving away from the default on-demand pricing model toward commitment-based discounts like Reserved Instances and Savings Plans.

But besides compute, cost optimization in AWS also scrutinizes every service and asks whether its cost contributes to a performance improvement or a core business goal.

If a complex managed service delivers more value than a simpler, more integrated option, it’s the right tool. If it doesn’t, stick with the integrated option.

All in all, AWS cloud cost optimization ensures every component of your architecture is purposeful and efficient.

Why is AWS so expensive?

- AWS’s default on-demand rates carry a premium for their flexibility. Even its Reserved Instances and Savings Plans can feel expensive, largely because many find their terms more confusing than comparable offerings from other providers.

- Following industry trends rather than business necessity, teams often end up deploying complex, expensive services for problems solvable with cheaper AWS primitives.

- In the rush to become cloud native, teams often run monolithic third-party applications in ways that make poor use of cloud resources.

- The desire for massive, instantaneous scalability leads teams to adopt hyperscale services for applications that will never see that kind of load.

The top 6 AWS cost optimization strategies

The following are the top six AWS cost optimization strategies that you can implement to keep your AWS costs under control.

1. Identify and delete orphaned snapshots

A quick AWS optimization win comes from tracking down and deleting orphaned Elastic Block Store (EBS) snapshots, which keep accruing a monthly storage fee long after their purpose has expired.

It’s easy to assume you’ve cleaned up storage just by terminating an EC2 instance and its associated EBS volume. But that assumption is wrong.

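The snapshots you created as backups quietly remain, stored in AWS-managed Amazon S3 storage that doesn’t show up in your own buckets. The initial snapshot is usually the largest because it’s a full copy of the volume, while later snapshots are incremental and reference its data. Deleting a snapshot frees only the blocks that no remaining snapshot still needs.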
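Finding orphans is mostly set logic. The Python sketch below assumes you’ve already pulled snapshot and volume records (for example via boto3’s `describe_snapshots(OwnerIds=["self"])` and `describe_volumes()`) into dictionaries with `SnapshotId`, `VolumeId`, and `StartTime` keys. The sample IDs and the 30-day grace period are hypothetical.

```python
from datetime import datetime, timedelta, timezone

def find_orphaned_snapshots(snapshots, volumes, min_age_days=30, now=None):
    """Return IDs of snapshots whose source volume no longer exists
    and that are older than min_age_days."""
    now = now or datetime.now(timezone.utc)
    live_volume_ids = {v["VolumeId"] for v in volumes}
    cutoff = now - timedelta(days=min_age_days)
    return [
        s["SnapshotId"]
        for s in snapshots
        # Orphaned: the volume is gone, and the snapshot is past the grace period.
        if s["VolumeId"] not in live_volume_ids and s["StartTime"] < cutoff
    ]

# Hypothetical records shaped like the EC2 API responses.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
snapshots = [
    {"SnapshotId": "snap-aaa", "VolumeId": "vol-111", "StartTime": now - timedelta(days=200)},
    {"SnapshotId": "snap-bbb", "VolumeId": "vol-222", "StartTime": now - timedelta(days=90)},
    {"SnapshotId": "snap-ccc", "VolumeId": "vol-333", "StartTime": now - timedelta(days=5)},
]
volumes = [{"VolumeId": "vol-222"}]
print(find_orphaned_snapshots(snapshots, volumes, now=now))  # ['snap-aaa']
```

Before deleting anything this flags, confirm the snapshot isn’t backing a registered AMI and isn’t required for compliance.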

Rather than hunting for them by hand, you can manage snapshots automatically with Amazon Data Lifecycle Manager, which lets you define policy-based lifecycle rules to:

- Automatically archive older snapshots to cheaper storage tiers.

- Systematically delete them after a set period.
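As a sketch, the policy below asks Data Lifecycle Manager to snapshot tagged volumes daily and delete each snapshot after a retention period. The tag key and value, schedule time, and retention length are hypothetical choices. The `create_lifecycle_policy` call is left commented out because it needs AWS credentials and a DLM execution role, and the account ID in the ARN is a placeholder. Archiving to the lower-cost tier is configured separately and isn’t shown here.

```python
import json

def build_snapshot_policy(tag_key, tag_value, retain_days):
    """Build DLM PolicyDetails for a daily snapshot schedule that keeps
    each snapshot for retain_days and then deletes it."""
    return {
        "PolicyType": "EBS_SNAPSHOT_MANAGEMENT",
        "ResourceTypes": ["VOLUME"],
        # Only volumes carrying this tag are covered by the policy.
        "TargetTags": [{"Key": tag_key, "Value": tag_value}],
        "Schedules": [
            {
                "Name": f"daily-{retain_days}d-retention",
                "CopyTags": True,
                # One snapshot every 24 hours, starting at 03:00 UTC.
                "CreateRule": {"Interval": 24, "IntervalUnit": "HOURS", "Times": ["03:00"]},
                # Age-based retention: delete snapshots older than retain_days.
                "RetainRule": {"Interval": retain_days, "IntervalUnit": "DAYS"},
            }
        ],
    }

policy = build_snapshot_policy("Backup", "daily", retain_days=14)
print(json.dumps(policy, indent=2))

# With boto3 and credentials you could then apply it (not run here):
# boto3.client("dlm").create_lifecycle_policy(
#     ExecutionRoleArn="arn:aws:iam::123456789012:role/AWSDataLifecycleManagerDefaultRole",
#     Description="Daily snapshots, 14-day retention",
#     State="ENABLED",
#     PolicyDetails=policy,
# )
```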

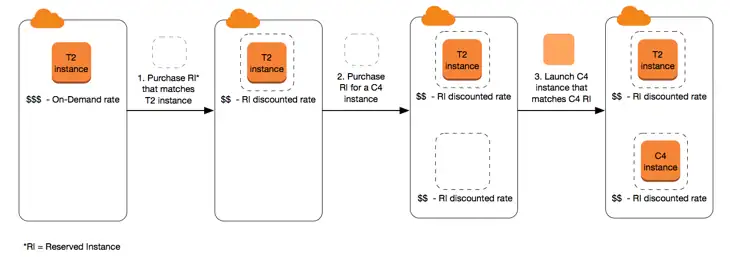

2. Purchase Reserved Instances

As we mentioned earlier, the leap from on-demand to Reserved Instance (RI) pricing is the first major step most teams take.

An RI provides a discount of up to 72% compared with on-demand pricing in exchange for a one- or three-year commitment.

But this mechanism only benefits you fully when you’ve done sound planning.

For instance, buying Standard RIs for a project that is decommissioned eight months into the term leaves you paying for the rest of a reservation nobody uses, a sunk cost that keeps draining the budget.
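To see why, here’s a simplified Python model that treats a Standard RI as an effective hourly rate billed for every hour of the term, whichever payment option you choose. The $0.10/hour on-demand and $0.07/hour RI rates (a 30% discount) are hypothetical, and 730 hours per month is an approximation.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months

def ri_vs_on_demand(on_demand_rate, ri_rate, term_months, months_used):
    """Compare paying on-demand only while the project runs with paying an
    RI's effective hourly rate for the whole term. Rates are in $/hour."""
    on_demand_cost = on_demand_rate * HOURS_PER_MONTH * months_used
    ri_cost = ri_rate * HOURS_PER_MONTH * term_months
    # Reservation hours still billed after the project is decommissioned.
    wasted = ri_rate * HOURS_PER_MONTH * (term_months - months_used)
    return on_demand_cost, ri_cost, wasted

def break_even_months(on_demand_rate, ri_rate, term_months):
    """Months of use after which the RI becomes cheaper than on-demand."""
    return term_months * ri_rate / on_demand_rate

od, ri, wasted = ri_vs_on_demand(0.10, 0.07, term_months=12, months_used=8)
print(f"On-Demand ${od:.2f} vs RI ${ri:.2f} (${wasted:.2f} for unused months)")
print(f"break-even after {break_even_months(0.10, 0.07, 12):.1f} months")
```

With these numbers, the eight-month project pays $613.20 for the reservation versus $584.00 on-demand, including $204.40 for four months nobody uses. The reservation only breaks even after 8.4 months of use; deeper discounts pull that point earlier.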

The fix to this is choosing the appropriate RI:

- Choose a Standard RI for stable and predictable workloads.

- If you anticipate change, choose a Convertible RI for its flexibility to modify the instance attributes as your needs evolve.

If a Standard RI still ends up idle with no internal use, you can sell it to another AWS customer on the Reserved Instance Marketplace so your investment isn’t lost. Convertible RIs can’t be sold there.

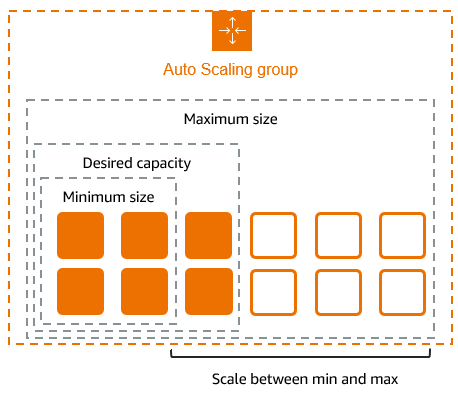

3. Implement intelligent auto-scaling

Perhaps the most beneficial of AWS cost optimization strategies is aligning your spending directly with actual usage instead of hypothetical peak capacity. In the cloud, we call this auto-scaling.

The unique selling point of the cloud has always been elasticity. Yet many teams run static server fleets sized well above their average load just to handle occasional traffic spikes.

This is like leaving the lights on in every room of a building all night in case someone needs to get a glass of water.

Auto-scaling fixes this by adding capacity when demand rises and removing it when demand falls, so you pay for what you actually use.
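EC2 Auto Scaling’s target tracking policies keep a metric such as average CPU utilization near a target value. The sketch below uses a rough proportional estimate, not AWS’s exact algorithm, assuming load spreads evenly across instances: desired capacity is the current capacity times the current metric divided by the target, rounded up and clamped to the group’s minimum and maximum. The fleet numbers are hypothetical.

```python
import math

def estimate_desired_capacity(current_capacity, current_metric, target, min_size, max_size):
    """Proportional estimate of the fleet size that brings a per-instance
    metric (e.g., average CPU %) back to the target value."""
    desired = math.ceil(current_capacity * current_metric / target)
    # Never go below the group's minimum or above its maximum.
    return max(min_size, min(max_size, desired))

# Daytime spike: 4 instances at 90% CPU with a 50% target -> scale out.
print(estimate_desired_capacity(4, 90, 50, min_size=2, max_size=10))  # 8
# Overnight lull: 8 instances at 10% CPU -> scale in to the minimum.
print(estimate_desired_capacity(8, 10, 50, min_size=2, max_size=10))  # 2
```

The overnight case is where the savings come from: a static fleet would keep all eight instances running.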

To push this further, incorporate Spot Instances for fault-tolerant workloads. Spot Instances run on spare EC2 capacity at up to 90% off on-demand prices, but AWS can reclaim them with a two-minute warning.

Auto-scaling also requires regular reviews of scaling activity reports and performance data to keep your scaling policies as lean as possible.
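To gauge what mixing Spot into a fleet might save, the sketch below splits capacity into an on-demand base plus a percentage of the remainder, mirroring the idea behind an Auto Scaling group’s `OnDemandBaseCapacity` and `OnDemandPercentageAboveBaseCapacity` settings. Rounding the on-demand share up is an assumption of this sketch, and the $0.10/hour on-demand and $0.03/hour Spot prices are hypothetical.

```python
import math

def split_capacity(desired, on_demand_base, on_demand_pct_above_base):
    """Split a fleet into (on_demand, spot) instance counts.
    The on-demand share above the base is rounded up (an assumption)."""
    base = min(desired, on_demand_base)
    above_base = desired - base
    on_demand = base + math.ceil(above_base * on_demand_pct_above_base / 100)
    return on_demand, desired - on_demand

def hourly_cost(on_demand, spot, on_demand_price, spot_price):
    """Fleet cost per hour in dollars."""
    return on_demand * on_demand_price + spot * spot_price

od, spot = split_capacity(10, on_demand_base=2, on_demand_pct_above_base=25)
print(od, spot)  # 4 6
print(f"mixed: ${hourly_cost(od, spot, 0.10, 0.03):.2f}/h")         # $0.58/h
print(f"all on-demand: ${hourly_cost(10, 0, 0.10, 0.03):.2f}/h")    # $1.00/h
```

Keeping an on-demand base preserves a minimum level of service if Spot capacity is reclaimed, while the Spot portion carries most of the savings.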

Tools such as AWS Compute Optimizer can also suggest more cost-effective instance types you may have overlooked.

4. Eliminate underutilized resources

Underutilized resources, such as EC2 instances, often belong to projects that have ended or were sized for workloads that never materialized.

By sitting idle, they waste your budget with every hour they run.

If you provision an instance with 12 GiB of memory, for example, you pay for all 12 GiB, whether your application uses two or all twelve.

Over-provisioning like this is a persistent driver of high AWS bills, but it’s also an avoidable one.

The main challenge is identifying underutilized infrastructure. You can leverage third-party FinOps platforms like Ternary to find it across compute, databases, storage, and more.

There are three ways to deal with underutilized resources to reduce AWS costs:

- Terminate if entirely idle or obsolete

- Stop if potentially needed later (switching to only paying for storage)

- Rightsize to a smaller instance type
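The three options can be sketched as a simple decision rule. Everything here is illustrative: the instance IDs, the 2% idle-CPU threshold, the 30% memory headroom, and the `needed_later` flag (which in practice might come from an owner tag or a team review) are assumptions. Note that EC2 doesn’t publish memory metrics by default; collecting them requires the CloudWatch agent.

```python
from dataclasses import dataclass

@dataclass
class InstanceStats:
    instance_id: str
    peak_cpu_pct: float         # peak CPU utilization over the lookback window
    peak_mem_gib: float         # peak memory in use (needs the CloudWatch agent)
    provisioned_mem_gib: float  # memory you are paying for
    needed_later: bool          # hypothetical flag, e.g., from an owner tag

def recommend_action(stats, idle_cpu_pct=2.0, headroom=1.3):
    """Return 'terminate', 'stop', 'rightsize', or 'keep' (illustrative thresholds)."""
    if stats.peak_cpu_pct < idle_cpu_pct:
        # Effectively idle: stop it if someone may need it, otherwise remove it.
        return "stop" if stats.needed_later else "terminate"
    # Memory actually required, with 30% headroom above the observed peak.
    required_gib = stats.peak_mem_gib * headroom
    # Sizes within an instance family usually double, so if the workload fits
    # in half the memory, one size down should still hold it.
    if required_gib <= stats.provisioned_mem_gib / 2:
        return "rightsize"
    return "keep"

fleet = [
    InstanceStats("i-web", 35.0, 2.0, 12.0, needed_later=True),
    InstanceStats("i-old-batch", 0.5, 0.3, 16.0, needed_later=False),
    InstanceStats("i-staging", 1.0, 1.0, 8.0, needed_later=True),
    InstanceStats("i-db", 60.0, 10.0, 12.0, needed_later=True),
]
for inst in fleet:
    print(inst.instance_id, recommend_action(inst))
```

The first instance is the 12 GiB example from above: it peaks at 2 GiB, so it’s flagged for rightsizing.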

5. Explore Compute Savings Plans

AWS’s Compute Savings Plans let you commit to a consistent amount of compute usage, measured in $/hour, for a one- or three-year term, instead of committing to specific instance types.

With them, you can reduce your bill by up to 66% across a range of compute services, including EC2, AWS Fargate, and Lambda.

In a way, this is a blanket discount over your entire compute environment.

However, this flexibility also comes with a risk. For businesses with highly fluctuating demand or those in a state of rapid transformation, an overestimated commitment can cause overpayment if actual compute needs fall short.

Therefore, teams should use Savings Plans only after establishing a reliable baseline of historical usage.
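One way to turn that baseline into a number is sketched below. It takes a low percentile of historical hourly on-demand-equivalent compute spend, so the plan is almost always fully used, then converts it into Savings Plan dollars using a single flat discount. That flat 30% discount is an assumption for illustration; real Savings Plan rates vary by service, instance family, and region. The history values are hypothetical.

```python
import math

def suggest_commitment(hourly_spend, percentile=10, assumed_discount=0.30):
    """Suggest an hourly Savings Plan commitment ($/hour) from history.

    hourly_spend: on-demand-equivalent compute spend for each past hour.
    Commits to a low percentile of that spend, then applies one flat,
    assumed discount to express it at Savings Plan rates."""
    if not hourly_spend:
        raise ValueError("need at least one hour of usage history")
    ordered = sorted(hourly_spend)
    # Nearest-rank percentile.
    rank = max(1, math.ceil(percentile * len(ordered) / 100))
    baseline = ordered[rank - 1]
    return baseline * (1 - assumed_discount)

history = [12, 15, 14, 30, 11, 13, 25, 16, 12, 40]  # hypothetical $/hour samples
print(f"${suggest_commitment(history):.2f}/hour")  # $7.70/hour
```

A low percentile deliberately leaves the spikier hours to on-demand pricing, the conservative choice for businesses with fluctuating demand.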

6. Optimize with Amazon S3 storage tiers

In most workloads, some files are accessed constantly while others are rarely touched after they’re created.

So storing all your files on the highest-performance storage tier doesn’t make sense. Yet many teams take this costly misstep.

For most organizations, the low-hanging fruit is matching each object’s S3 storage class to how often it’s actually accessed.

Native AWS tools like S3 Storage Class Analysis can analyze access patterns and help identify data that’s a candidate for a lower-cost storage class.

After analysis comes automation through Lifecycle Policies.

In this stage, you set rules based on object age, guided by the access patterns you observed, to perform either or both of these actions:

- Automatically transition objects to cheaper tiers after a defined period

- Archive objects to Glacier for long-term retention
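As a sketch, the function below builds an S3 Lifecycle configuration that moves objects under a prefix to Standard-IA, then to S3 Glacier Flexible Retrieval (storage class `GLACIER`), and optionally deletes them. The prefix, day counts, and bucket name are hypothetical; S3 itself rejects Standard-IA transitions earlier than 30 days, while the ordering check is this sketch’s own sanity check. The `put_bucket_lifecycle_configuration` call is commented out because it needs credentials.

```python
import json

def build_lifecycle_config(prefix, ia_after_days=30, archive_after_days=90, expire_after_days=None):
    """Return an S3 Lifecycle configuration that tiers objects under `prefix`
    down to STANDARD_IA, then GLACIER, and optionally expires them."""
    if ia_after_days < 30:
        # S3 rejects STANDARD_IA transitions for objects younger than 30 days.
        raise ValueError("STANDARD_IA transitions need at least 30 days")
    if archive_after_days <= ia_after_days:
        # Our own sanity check: archive only after the IA transition.
        raise ValueError("archive transition must come after the IA transition")
    rule = {
        "ID": "tier-down-" + (prefix.strip("/") or "all"),
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": archive_after_days, "StorageClass": "GLACIER"},
        ],
    }
    if expire_after_days is not None:
        rule["Expiration"] = {"Days": expire_after_days}
    return {"Rules": [rule]}

config = build_lifecycle_config("logs/", expire_after_days=365)
print(json.dumps(config, indent=2))

# Applying it requires boto3 and credentials (not run here):
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-bucket", LifecycleConfiguration=config
# )
```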

Teams wanting a fully hands-off solution can use S3 Intelligent-Tiering, which automates both analysis and transfer of data between access tiers based on changing patterns.

Pillars of AWS cost optimization

Rightsize

This pillar says that the resources you provision must match your actual workload requirements. Any resource that is over-provisioned, underutilized, or completely idle should be dealt with appropriately.

Increase elasticity

Covered above under auto-scaling, this pillar refers to dynamically scaling systems to meet fluctuating demand. It contrasts with traditional IT infrastructure, which keeps running even during periods of zero demand.

Leverage the right pricing model

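AWS offers a variety of pricing models to match your workload’s behavior. To reduce costs further, blend them intelligently: commitments like Savings Plans and Reserved Instances for steady baseline usage, on-demand for unpredictable demand, and Spot Instances for fault-tolerant work, all without sacrificing performance or reliability.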

Optimize storage

This pillar is about choosing the right storage tier for your workload based on the following factors:

- File access method

- File access frequency

- Latency

- Required throughput

- Data availability

Measure, monitor, and improve

A cloud environment is a dynamic ecosystem and thus demands a continuous feedback loop. This loop has the following requirements:

- Consistent cost allocation tagging

- Regular reviews of cost efficiency metrics and KPIs

- Building a culture of cost awareness

- Assigning developers the responsibility to make cost-conscious architectural decisions
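Consistent tagging is easy to check automatically. The sketch below reports which required cost allocation tags each resource is missing, treating empty values as missing. The required tag set and resource IDs are hypothetical; the `{"Key": ..., "Value": ...}` tag shape mirrors what AWS APIs such as EC2’s return.

```python
REQUIRED_TAGS = {"CostCenter", "Owner", "Project"}  # hypothetical tagging standard

def find_tag_gaps(resources, required=REQUIRED_TAGS):
    """Map each resource ID to the sorted required tag keys it lacks.
    Tags with empty values count as missing."""
    gaps = {}
    for res in resources:
        present = {t["Key"] for t in res.get("Tags", []) if t.get("Value")}
        missing = sorted(required - present)
        if missing:
            gaps[res["ResourceId"]] = missing
    return gaps

resources = [
    {"ResourceId": "i-0abc", "Tags": [{"Key": "CostCenter", "Value": "42"},
                                       {"Key": "Owner", "Value": "payments"},
                                       {"Key": "Project", "Value": "checkout"}]},
    {"ResourceId": "vol-0def", "Tags": [{"Key": "Project", "Value": ""}]},
    {"ResourceId": "bucket-logs"},
]
print(find_tag_gaps(resources))
```

Running a report like this in regular reviews keeps untagged spend from quietly accumulating.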

AWS cost optimization best practices

Here are some of the best practices you should keep in mind before you begin optimizing costs for AWS.

Gain visibility into your cloud spend

Many organizations encounter bill shock simply because they lack visibility into their cloud spend. And as we know, a foundational principle of cloud cost management is that you can’t manage what you can’t see.

So, the first step of cost optimization in AWS should be gaining single-pane-of-glass visibility into who is using what resources, for which projects, and at what cost.
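At its simplest, that visibility means rolling cost up by owner and surfacing what nobody owns. The sketch below assumes cost line items have already been flattened into dictionaries with a `project` tag value and a `cost` in dollars (for example from a Cost and Usage Report export); the data is hypothetical.

```python
from collections import defaultdict

def spend_by_project(line_items):
    """Total cost per project tag; items without a tag land in 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        totals[item.get("project") or "untagged"] += item["cost"]
    # Largest spenders first.
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))

line_items = [
    {"service": "EC2", "project": "checkout", "cost": 1200.0},
    {"service": "S3", "project": "search", "cost": 800.0},
    {"service": "EC2", "project": None, "cost": 500.0},
    {"service": "RDS", "project": "checkout", "cost": 300.0},
]
totals = spend_by_project(line_items)
untagged_share = totals.get("untagged", 0.0) / sum(totals.values())
print(totals)  # {'checkout': 1500.0, 'search': 800.0, 'untagged': 500.0}
print(f"untagged: {untagged_share:.1%}")  # untagged: 17.9%
```

The untagged share is a useful first metric: it tells you how much of the bill you can’t yet attribute to anyone.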

If AWS’s native tools don’t offer the level of visibility you need, turn to third-party FinOps solutions like Ternary.

Select the right AWS region

The AWS region you choose influences latency, cost (prices vary between regions), application availability, and compliance.

So don’t choose a region on autopilot, and don’t base the choice on a single factor (most commonly latency).
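Weighing several factors can be as simple as filtering on hard constraints and then minimizing price. In the sketch below, the prices and latencies are hypothetical, not real AWS figures, and the EU-only allowlist stands in for a data-residency requirement.

```python
def pick_region(candidates, max_latency_ms, allowed_regions):
    """Return the cheapest region meeting latency and compliance limits, or None."""
    eligible = [
        c for c in candidates
        if c["latency_ms"] <= max_latency_ms and c["region"] in allowed_regions
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda c: c["hourly_price"])["region"]

# Hypothetical prices and latencies for one instance type.
candidates = [
    {"region": "us-east-1", "hourly_price": 0.096, "latency_ms": 95},
    {"region": "eu-west-1", "hourly_price": 0.107, "latency_ms": 25},
    {"region": "eu-central-1", "hourly_price": 0.115, "latency_ms": 18},
]
eu_only = {"eu-west-1", "eu-central-1"}
print(pick_region(candidates, max_latency_ms=50, allowed_regions=eu_only))  # eu-west-1
```

Here a latency-only choice would pick eu-central-1 and a price-only choice would pick us-east-1, which fails the residency requirement; weighing all three factors lands on eu-west-1.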

For workloads that need disaster recovery, deploying across multiple regions creates a more resilient architecture, though duplicated resources and cross-region data transfer add costs of their own.

Commit to continuous optimization

AWS keeps releasing new services, features, and pricing models. Each release can unlock new efficiencies, but it can also leave your existing setup comparatively inefficient.

So review your environment regularly and apply two kinds of optimization:

- Incremental optimization: adopt newer, more efficient resource types

- Architectural optimization: replace entire components with newer, more cost-effective services

Final thoughts

After learning all these AWS cost optimization strategies, if you’re still having challenges with optimizing your AWS costs, it might be time to evaluate a third-party solution like Ternary.

Ternary helps finance, FinOps, and engineering teams make informed decisions based on real-time cost data and actionable insights.

Try Ternary today.