Last week, I had the pleasure of attending my second FinOps X conference in San Diego, California. As advertised, FinOps X was a wonderful opportunity to enjoy the company of the many friends I’ve made in the FinOps community. If you’re not familiar with the particular vibe that sets FinOps X apart from other conferences, the closest comparison I can offer is being an active member of a small Reddit or other online community whose members suddenly decide to take a two-day cruise together. You constantly meet and catch up with people you know from around the world, and you can finally put faces to the names of people you’ve talked to extensively online but never met. You don’t just have casual conversations; you also share meals and exchange innovative ideas. It’s definitely unlike any other technology conference I have ever been to.

On the conference’s first full day, I presented a breakout session with my co-founder, Sasha Kipervarg, sharing Ternary’s AI journey. For those who were able to attend (quite a few of you!), I sincerely thank you for your time and hope you learned something from our story. For those who weren’t able to attend, I’d like to summarize a few of the key takeaways from our talk.

It’s okay to be skeptical of new and powerful technology

When the AI wave first hit, we largely dismissed it. At the time, our main focus was making our product a reliable FinOps analytics and reporting platform. We saw risk in the first wave of large language models (LLMs) due to their propensity to hallucinate. We didn’t want the Ternary platform to hallucinate thousands of dollars of spending or millions of dollars of savings that didn’t exist. Where we did see value was in machine learning algorithms tuned for generating insights from time series data, such as ARIMA and Prophet. Overall, we saw LLMs as a flash in the pan that could be useful for businesses other than ours but weren’t a fit for our product. We thought it would be disingenuous to simply jam chat functionality into our platform just to keep up with the latest industry trend.

I still think these feelings were valid and the right course of action at the time. But we made one mistake: we didn’t experiment enough with what was there. We jumped to the conclusion that the technology was not suited for our business. And we waited longer than we should have to circle back and see how things had progressed.

During the live breakout, Sasha and I polled the audience on their AI adoption stages. Interestingly, no one in the room raised their hand when we asked if they were still skeptical of AI. Instead, the majority of attendees indicated that they had AI in R&D or in production. Indeed, there has been a flurry of activity in the market over the past six months, and we, too, now believe that LLMs are ready for prime time. So, how did we adopt LLMs? The answer may surprise you.

To master technology, we must integrate it into our daily lives

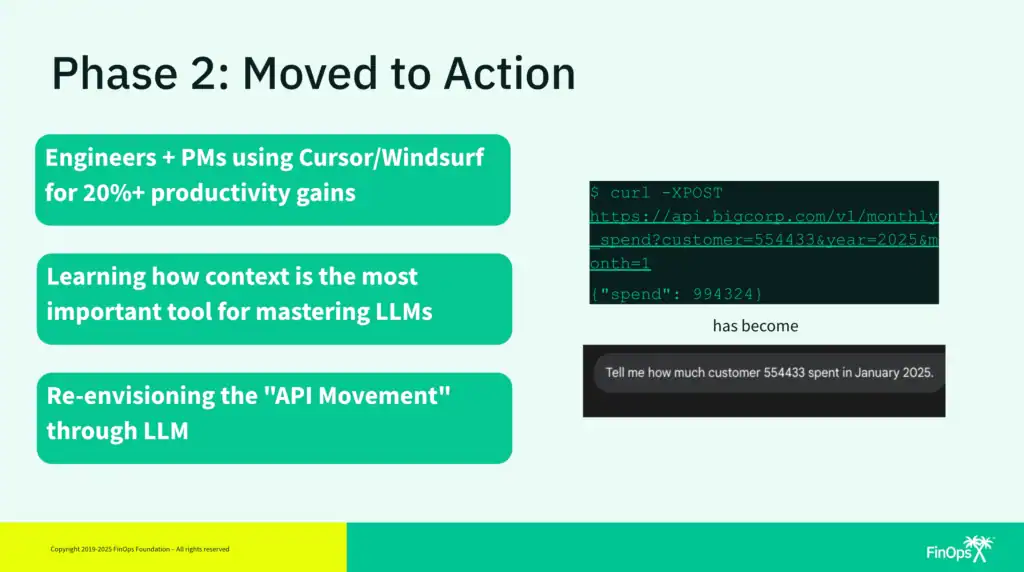

Once we realized it was time to re-evaluate LLM tools as part of our company’s AI strategy, we took a different first step than most companies. Rather than jumping straight into prototyping or brainstorming product features using LLMs, we started by applying the tools to our everyday business tasks.

As a fully remote team, we quickly found value in using Zoom meeting summaries and chat assistants. Our engineering team adopted LLM-enhanced IDEs such as Cursor and Windsurf to accelerate development and reduce everyday toil. We immediately saw wins in unit test generation, code documentation, and modest refactors. Seeing the strengths and weaknesses of these tools through everyday tasks helped us understand where we could create value in our product.

Armed with this knowledge, we could more easily identify key areas where LLMs could make a difference in our product, including agents and MCP/A2A, big data analysis, and improvements to our machine learning models. Keep an eye out for more information about our latest innovations in this area.

It’s all just FinOps

Ternary is now at the same AI adoption phase as many organizations, but only after a healthy period of skepticism. As we transition from the R&D phase into production, we realize we’ll need to monitor how our AI usage scales, and to do so in a new vocabulary of tokens, models, and inference. But as we heard from Mike Fuller in the Day 2 keynote, it’s all just FinOps. There’s new terminology and there are new risks, but we know how to handle this. We just need to establish cost visibility for our new usage, monitor it as the spend grows, and be mindful of ROI. And as newer, more efficient models become available, we should be ready to make adjustments to take full advantage of them. At Ternary, we drink our own champagne by managing our AI spend with our own platform.
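To make the idea of cost visibility a little more concrete, here is a minimal Python sketch of rolling token usage up into spend per model and per day. It is an illustration only, not how the Ternary platform works: the model names, the per-1,000-token prices, and the `UsageRecord` shape are all hypothetical placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens. These are placeholders,
# not real vendor rates; substitute the rates from your own contract.
PRICE_PER_1K_TOKENS = {
    "model-small": {"input": 0.0005, "output": 0.0015},
    "model-large": {"input": 0.01, "output": 0.03},
}


@dataclass
class UsageRecord:
    """One inference call (hypothetical shape for this sketch)."""
    day: str            # e.g. "2025-06-01"
    model: str          # must be a key in PRICE_PER_1K_TOKENS
    input_tokens: int
    output_tokens: int


def record_cost(record: UsageRecord) -> float:
    """Cost of a single call: tokens / 1000 * price, for input and output."""
    price = PRICE_PER_1K_TOKENS[record.model]
    return (
        record.input_tokens / 1000 * price["input"]
        + record.output_tokens / 1000 * price["output"]
    )


def cost_by(records: list[UsageRecord], key: str) -> dict[str, float]:
    """Total spend grouped by a record attribute such as 'model' or 'day'."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        totals[getattr(record, key)] += record_cost(record)
    return dict(totals)


if __name__ == "__main__":
    usage = [
        UsageRecord("2025-06-01", "model-small", 2000, 1000),
        UsageRecord("2025-06-01", "model-large", 1000, 1000),
        UsageRecord("2025-06-02", "model-large", 3000, 500),
    ]
    print("Spend by model:", cost_by(usage, "model"))
    print("Spend by day:  ", cost_by(usage, "day"))
```

Grouping by day gives the trend to watch as spend grows, and grouping by model shows where switching to a newer, more efficient model would matter most.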

We’re genuinely excited about what AI can do for our team and for our customers through the product. Stay tuned for new Ternary functionality to support FinOps for AI coming soon.
